How to distribute services on a 10‑node Raspberry Pi CM5 cluster

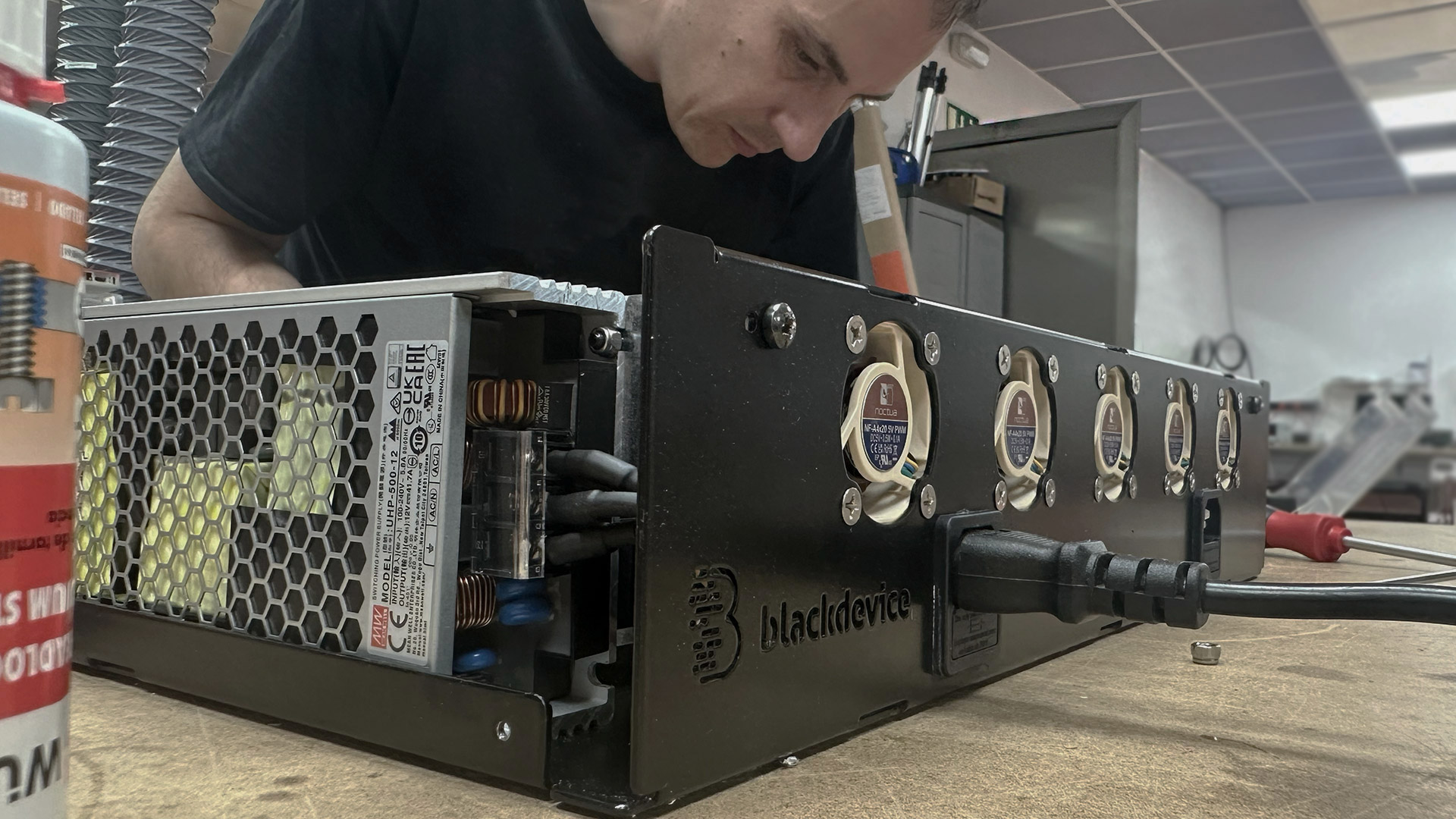

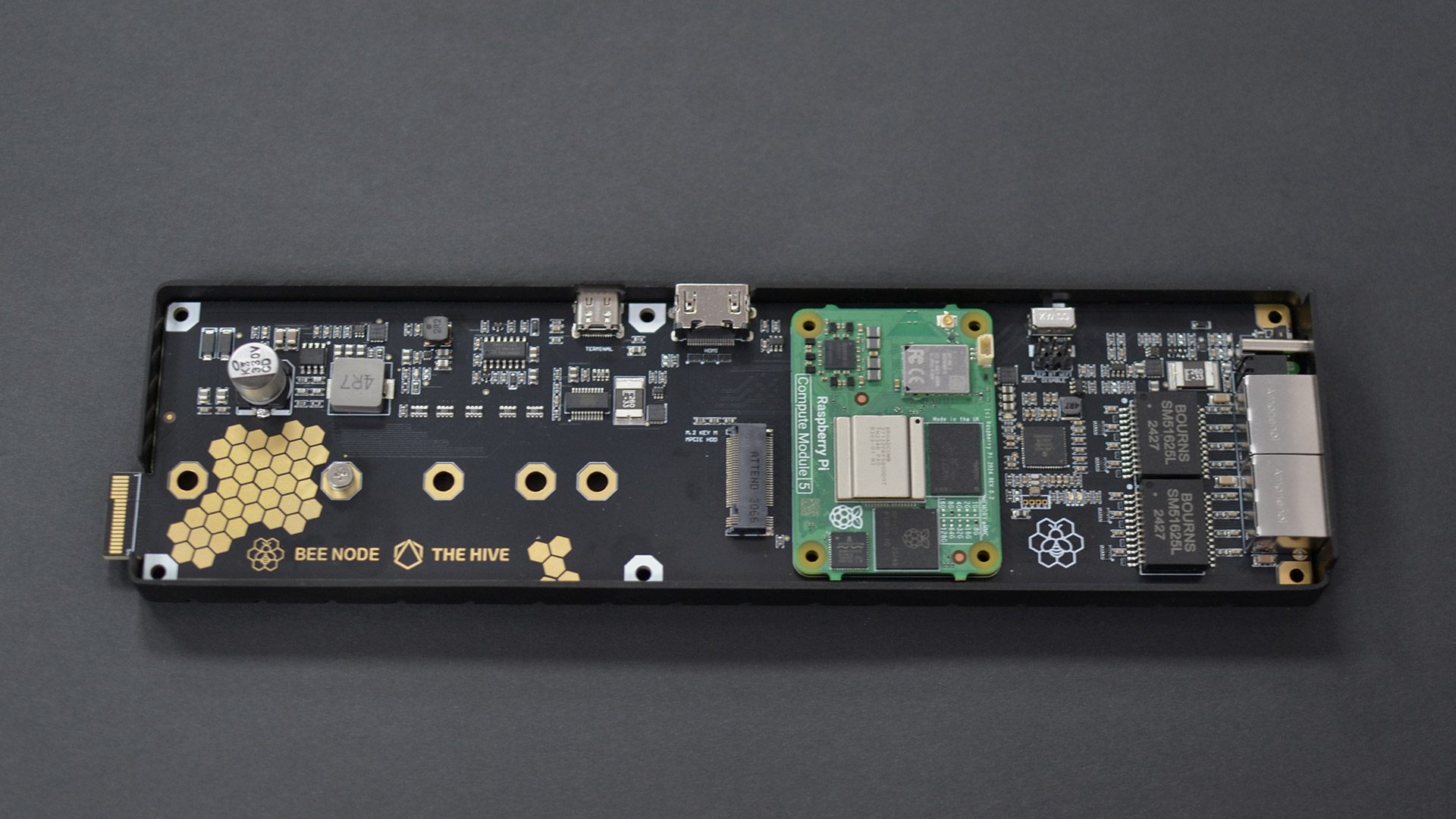

Our ARM Cluster project is moving forward: we already have several “bee nodes” operational in a fully functional prototype, which we showcase in this post and in this video. As we continue developing and producing more units of the updated bee nodes—refining features and fitting them with sleek anodized aluminum enclosures—we’ll soon be able to test them all together and demonstrate their true capabilities!

In the meantime, here are some ideas for services you can run on a 10-node Raspberry Pi CM5‑based ARM cluster, aimed at tech enthusiasts, home‑lab experimenters, system administrators, and IT professionals who want to trial apps and services before deploying them to production.

Running services on a 10-node Raspberry Pi CM5 ARM cluster isn’t just fun—it’s a hands-on way to simulate enterprise-grade deployments in your own lab.

Here’s how we distribute workloads across our “bee nodes” for maximum efficiency and flexibility.

Your 10‑node ARM cluster: suggested service layout

Below is one possible way to assign services across the 10 Raspberry Pi Compute Module 5 nodes (our bee nodes), leaving one free for future use:

| Node | Service(s) | Brief description |
|---|---|---|
| 1 | GitLab | Code repository, CI/CD pipelines, and private container registry. |
| 2 | PowerDNS Recursor, NTP (member), MinIO/FTP | Resolve DNS without relying on external providers; synchronize time across your LAN; store and share files locally or externally. |
| 3 | PowerDNS Authoritative, NTP (member), Rclone | Host your internal domain (e.g. blackdevice.lan); provide redundant time synchronization; back up and sync data to cloud or network targets. |
| 4 | Kubernetes control plane + etcd | Coordinates the cluster and stores its state. |
| 5 | Kubernetes worker | Runs containers and microservices. |
| 6 | Kubernetes worker | Same as Node 5. |
| 7 | Kubernetes worker | Same as Node 5. |
| 8 | NFS Server | Provides persistent shared storage for Kubernetes and VMs. |
| 9 | Portainer, Sentry | Web UI for Docker container management; real‑time error monitoring for apps. |
| 10 | Free | Keep this node available for new services or scaling existing ones. |

Nodes in use: 9; nodes free: 1.
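If you keep the layout in version control (for example in the GitLab instance on Node 1), it can help to express it as plain data and let a script recompute the totals whenever you reassign a node. The Python sketch below is one hypothetical way to do that: `LAYOUT`, `node_counts` and `nodes_running` are illustrative names, not part of any existing tool, and the node assignments are copied from the table.

```python
"""Sketch: the suggested 10-node service layout expressed as plain data.

The node numbers and service names mirror the layout table; the dict
structure and helper names are illustrative, not from any real tool.
"""

TOTAL_NODES = 10

# Node number -> services assigned to it (an empty list marks a free node).
LAYOUT: dict[int, list[str]] = {
    1: ["GitLab"],
    2: ["PowerDNS Recursor", "NTP (member)", "MinIO/FTP"],
    3: ["PowerDNS Authoritative", "NTP (member)", "Rclone"],
    4: ["Kubernetes control plane", "etcd"],
    5: ["Kubernetes worker"],
    6: ["Kubernetes worker"],
    7: ["Kubernetes worker"],
    8: ["NFS Server"],
    9: ["Portainer", "Sentry"],
    10: [],
}


def node_counts(layout: dict[int, list[str]], total: int) -> tuple[int, int]:
    """Return (nodes in use, nodes free) after checking every node is listed once."""
    if set(layout) != set(range(1, total + 1)):
        raise ValueError("layout must list every node from 1 to total exactly once")
    in_use = sum(1 for services in layout.values() if services)
    return in_use, total - in_use


def nodes_running(layout: dict[int, list[str]], service: str) -> list[int]:
    """Return the sorted node numbers that host the given service."""
    return sorted(node for node, services in layout.items() if service in services)


if __name__ == "__main__":
    used, free = node_counts(LAYOUT, TOTAL_NODES)
    print(f"Nodes in use: {used}; nodes free: {free}")
    print("Kubernetes workers:", nodes_running(LAYOUT, "Kubernetes worker"))
```

Running it prints 9 nodes in use, 1 free, and lists Nodes 5, 6 and 7 as workers; when you later claim Node 10, adding its services to `LAYOUT` updates both totals.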

What each block brings to the table

- GitLab (Node 1)

  Centralize your source code, automate tests and deployments, and host your own container images.

- Multi‑role services (Nodes 2 & 3)

  - PowerDNS Recursor & Authoritative: Pair a recursive resolver for outside names with an authoritative server for your internal domain, so internal names keep resolving even when an upstream provider is down, and repeated lookups are answered from the local cache.

  - NTP cluster members: Keep all your devices (servers, switches, IoT gear) in sync, preventing time‑related errors such as out‑of‑order log timestamps or failed certificate validation.

  - MinIO/FTP & Rclone: Share files on‑premises or sync backups to virtually any cloud or remote storage.

- Kubernetes (Nodes 4–7)

  - Control plane + etcd (Node 4): The brain and state store of your cluster.

  - Workers (Nodes 5–7): Deploy containers, APIs, and microservices to test concepts or run lightweight production loads.

- Persistent storage (Node 8)

  An NFS server hands out shared volumes to Kubernetes pods and VMs alike, ideal for databases, media libraries, or any data that must survive restarts.

- Container orchestration & observability (Node 9)

  - Portainer: Manage Docker containers through an intuitive web interface.

  - Sentry: Capture and track errors in your applications in real time.

- Spare capacity (Node 10)

  Whether you need a dedicated database, AI/ML service, caching proxy, or anything else, you’ve got one node ready to go.
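One common way to combine the two PowerDNS roles, assumed here rather than described in the post, is split‑horizon forwarding: clients query the Recursor on Node 2, which forwards names in the internal zone (blackdevice.lan) to the Authoritative server on Node 3 and resolves everything else itself. This toy Python model only illustrates that routing decision, including the label‑boundary check that keeps a name like `notblackdevice.lan` out of the internal zone; the address `10.0.0.3` and all function names are hypothetical.

```python
"""Toy model of split-horizon DNS routing between Nodes 2 and 3.

Assumption (not stated in the post): the Recursor on Node 2 forwards the
internal zone to the Authoritative server on Node 3 and resolves all
other names itself. The address below is a hypothetical placeholder.
"""

INTERNAL_ZONE = "blackdevice.lan"
AUTHORITATIVE_NODE3 = "10.0.0.3"  # hypothetical LAN address of Node 3


def normalize(name: str) -> str:
    """Lower-case a DNS name and drop any trailing root dot."""
    return name.lower().rstrip(".")


def in_zone(qname: str, zone: str) -> bool:
    """True if qname is the zone apex or a name below it, on a label boundary."""
    q, z = normalize(qname), normalize(zone)
    return q == z or q.endswith("." + z)


def route(qname: str) -> str:
    """Describe where the Recursor would send this query in the model."""
    if in_zone(qname, INTERNAL_ZONE):
        return f"forward to {AUTHORITATIVE_NODE3}"
    # Everything outside the internal zone is resolved by the Recursor itself.
    return "resolve recursively"


if __name__ == "__main__":
    for name in ("gitlab.blackdevice.lan.", "BlackDevice.LAN", "example.com", "notblackdevice.lan"):
        print(f"{name:26} -> {route(name)}")
```

In a real deployment you would not write this logic yourself: PowerDNS Recursor provides it through its `forward-zones` setting, whose configuration syntax differs between Recursor versions, so check the documentation for the version you install.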
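The NTP members on Nodes 2 and 3 let every client estimate how far its own clock is off. The NTP specification (RFC 5905) derives that estimate from four timestamps taken during a single request/response exchange; the Python sketch below implements only that formula, with made‑up millisecond values, and is in no way a replacement for an NTP daemon.

```python
"""Clock offset and round-trip delay from one NTP exchange (RFC 5905 formulas).

t1: client transmit time (client clock)
t2: server receive time  (server clock)
t3: server transmit time (server clock)
t4: client receive time  (client clock)
All four values must use the same unit; the example uses milliseconds.
"""

from typing import NamedTuple


class Sample(NamedTuple):
    offset: float  # positive: client clock is behind the server by this amount
    delay: float   # round-trip network delay, excluding server processing time


def ntp_sample(t1: float, t2: float, t3: float, t4: float) -> Sample:
    """Compute offset = ((t2 - t1) + (t3 - t4)) / 2 and delay = (t4 - t1) - (t3 - t2)."""
    offset = ((t2 - t1) + (t3 - t4)) / 2
    delay = (t4 - t1) - (t3 - t2)
    return Sample(offset, delay)


if __name__ == "__main__":
    # Hypothetical exchange: the server clock runs 500 ms ahead of the client,
    # each network leg takes 5 ms and the server spends 1 ms processing.
    print(ntp_sample(0, 505, 506, 11))
```

A client that queries both Node 2 and Node 3 gets one such sample per server; daemons such as chrony and ntpd filter and combine many samples before adjusting the clock.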

Conclusion

This 10‑node Raspberry Pi CM5 cluster gives you a full‑stack playground—from version control and DNS to Kubernetes, storage, monitoring, and beyond—while sipping power and fitting on a bookshelf. With one node left free, you have room to grow, experiment, and adapt your infrastructure as new needs arise.

Become a HIVE early bird!

If you’re passionate about ARM clusters, home labs, or IoT innovations, join our mailing list to be the first to hear about updates and launch plans for THE HIVE Arm Cluster project. Let’s build the future of micro‑data centers together!